Last year, when I first tried out an “emotionally intelligent” AI customer service bot, I was impressed by how it seemed to pick up on my frustration. The voice became softer, the pace of the delivery slowed, and I even felt calmness wash over me. Then a question dawned on me: what did I just give away with these emotions?

This is more than just chatbots making polite chatter. The fact of the matter is that emotional data (smiles, frowns, and tremors in our voice) doesn’t just freakin’ disappear after it is collected. It is stored, it is categorized, and, in many instances, it is recycled to train AI systems that will shape future interactions.

Today, I will take you into the world of AI that recycles emotional data: what it is, how it works, why it matters, and what it might mean for privacy, ethics, and even human rights.

What Is Emotional AI?

Emotional AI (aka affective computing or emotional artificial intelligence) is a branch of AI that focuses on the development of systems that are able to recognize, interpret, process, and simulate human emotions.

Emotional AI uses technologies like machine learning, natural language processing (NLP), and computer vision to interpret human emotional responses from inputs such as facial expressions, vocal intonation, and physiological signals.

What does it mean for AI to “recycle emotional data”?

At a fundamental level, emotional AI captures signals like:

- Facial expressions (micro-expressions that last less than half a second)

- Vocal tone (stress signals, hesitation, enthusiasm)

- Physiological cues (heart rate from wearables, dilation of pupils, and body heat)

Now here’s the twist: these signals are not used once and thrown away. They are saved and put to use again in different contexts. Think of it as the AI keeping a library of emotions: your sadness today could help it recognize sadness in someone else tomorrow.
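To make the "library of emotions" idea concrete, here is a minimal Python sketch. It is an illustration under loud assumptions, not how any real product works: the `EmotionSample` and `EmotionLibrary` names, the two made-up voice features, and the nearest-neighbour lookup are all hypothetical choices of mine.

```python
from dataclasses import dataclass
from math import dist
from typing import List, Optional, Tuple


@dataclass
class EmotionSample:
    # Hypothetical features: (pitch variance, speech rate), both scaled to 0..1.
    features: Tuple[float, float]
    label: str


class EmotionLibrary:
    """Toy store that keeps every labeled sample and reuses it later."""

    def __init__(self) -> None:
        self.samples: List[EmotionSample] = []

    def store(self, sample: EmotionSample) -> None:
        # Nothing is thrown away: each captured signal stays in the library.
        self.samples.append(sample)

    def guess(self, features: Tuple[float, float]) -> Optional[str]:
        # Label a new person's signal with the closest stored sample's label.
        if not self.samples:
            return None
        nearest = min(self.samples, key=lambda s: dist(s.features, features))
        return nearest.label


library = EmotionLibrary()
library.store(EmotionSample((0.9, 0.2), "sad"))      # your voice, today
library.store(EmotionSample((0.2, 0.8), "excited"))  # someone else's
print(library.guess((0.8, 0.3)))  # a stranger, tomorrow; prints "sad"
```

Even in this toy version, the stranger’s label comes entirely from a sample that someone else left behind, which is the recycling in a nutshell.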

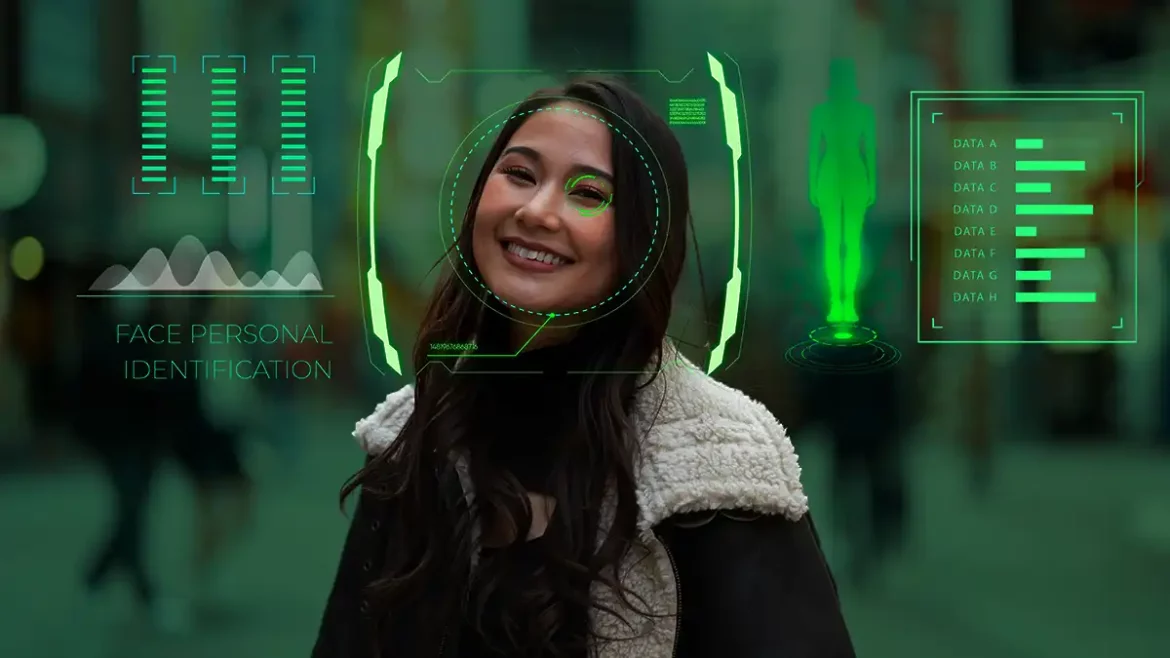

Facial Recognition and Emotion Recycling

This is the exciting—and unsettling—part of the conversation.

Companies use facial recognition to read emotional signals for targeted advertising, employee monitoring, and even law enforcement. Let’s say you are watching a video ad, and a brief smile is captured on camera. That micro-expression could be stored and used to train an algorithm that recognizes smiles in the future, potentially from thousands of strangers.

Your emotional fingerprint is now part of a global pool, stripped of its original context, and subsequently “recycled” across industries.

How Is Emotional Data Reused in AI?

| Source of Emotion | How It Is Recycled | Potential Risks |
| --- | --- | --- |
| Facial recognition | Shared across ad targeting platforms | Misuse in surveillance and profiling |
| Voice tone analysis | Applied to call centers for customer service training | Manipulation of moods |
| Online interactions | Used to predict consumer purchase intent | Emotional exploitation in marketing |
| Wearable sensors | Fed into fitness and healthcare apps | Breach of health privacy |

The Ethical Challenges of AI Emotion Recycling

This is where I pause. I am geeky enough to appreciate the capabilities of the technologies behind this. But as a human being, I need to point out the ethical issues:

- Consent: Most people are not aware that their emotional data may live on long after the interaction ends.

- Bias: Emotional cues vary across cultures, yet recycled emotional data often ignores that context.

- Exploitation: If your stress response is extracted and recycled into a sales algorithm, are you being nudged into decisions you would not normally make?

Who Owns the Emotional Data in AI?

I asked someone who works in data ethics this very question, and his answer was, “At this moment? Nobody, which is the problem.”

Ownership of emotional data is still undefined:

- Companies claim they own it because their technology extracted it.

- Users usually believe they own it because it embodies their emotional state.

- Governments? They are still catching up.

Therefore, who owns the emotional data in AI? Legally, it’s blurry. Morally, I believe we do. Because your love, sadness, and fear are not data points—they are human experiences.

Emotional AI and Human Rights

This is where the conversation goes global. Organizations like the UN and Amnesty International are asking whether emotional AI could infringe on human rights.

Consider:

- Freedom of thought might be compromised if emotions are not just detected once but monitored continuously.

- The right to privacy is trampled when your emotions are recycled without your consent.

- Emotional manipulation edges toward distorting free will itself.

Manipulation Risks of Recycled Emotions

This is the part that honestly frightens me. If AI can recycle emotions, it can also design responses to elicit a targeted outcome.

Examples include:

- Shopping apps that use recycled stress signals to time “flash sales.”

- Workplace tools that prompt employees with “calm tones” as deadlines approach.

- Political campaigns that push fear-based messaging.

Recycled emotions can be used as persuasive weapons that are subtly effective in influencing outcomes without the target audience even realizing they are being persuaded.

Privacy in Emotional Artificial Intelligence

Let’s discuss privacy—the aspect most of us think we understand but actually don’t.

Unlike regular data (like your search history), emotional data is intimately personal. It reveals not only what you did and when, but also how you felt while doing it. That is a different level of surveillance.

Currently, there are very few regulations that specifically deal with emotional privacy. In Europe, GDPR has language concerning biometric data, but emotional data is not explicitly named. This is a significant loophole.

Where Do We Go From Here?

The big question: Should emotional data even be recyclable?

I don’t have an easy answer, but I can share my perspective. When I was using that emotion-aware chatbot, part of me enjoyed the seamless experience. But part of me wondered if I was being comforted or observed.

As AI progresses, we will have a choice to make:

- We can either treat emotional data as a renewable fuel source for innovation

- Or treat it as sacred human expression that deserves protection.

Trends to Keep an Eye on for 2025 and Beyond

I have three trends that I am actively tracking:

Emotional Data Regulation

Countries are likely to draft new GDPR-style regulations focused specifically on emotional data.

Ethical AI Labels

Companies may start advertising “non-recycled” emotional AI, much like organic labels on food.

Owner-Controlled Emotional Vaults

Apps may emerge that let you store, share, or delete your own emotional data.
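To picture what an owner-controlled vault could look like, here is a small Python sketch. No such product or API is described above, so every name here (`EmotionalVault`, `store`, `share`, `read`, `delete`) is a hypothetical of mine, and a real system would need encryption, authentication, and audit logs on top.

```python
class EmotionalVault:
    """Toy vault: the owner decides which apps may read which records."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self._records = {}  # record_id -> emotional data
        self._grants = {}   # record_id -> set of apps allowed to read it

    def store(self, record_id: str, data: dict) -> None:
        # New records start private: no app has access by default.
        self._records[record_id] = data
        self._grants[record_id] = set()

    def share(self, record_id: str, app: str) -> None:
        # Explicit, per-record consent granted by the owner.
        self._grants[record_id].add(app)

    def read(self, record_id: str, app: str) -> dict:
        # Apps without a grant (or asking for deleted data) are refused.
        if app not in self._grants.get(record_id, set()):
            raise PermissionError(f"{app} has no access to {record_id}")
        return self._records[record_id]

    def delete(self, record_id: str) -> None:
        # Deleting removes both the data and every grant attached to it.
        self._records.pop(record_id, None)
        self._grants.pop(record_id, None)


vault = EmotionalVault(owner="me")
vault.store("call-001", {"emotion": "frustrated"})
vault.share("call-001", "support-bot")
print(vault.read("call-001", "support-bot"))
vault.delete("call-001")
```

The design choice that matters is the default: nothing is shared until the owner says so, and deleting a record also removes every permission attached to it.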

My Final Thoughts

The exciting and frightening thing about AI is its ability to use emotional data for multiple purposes. It can enhance experiences, predict wants or needs, and comfort us when we sit in uncomfortable places—but it can also exploit, manipulate, and invade our most private inner world.

The next time a chatbot asks, “How are you feeling today?”, take a moment to think, because your answer may live on, recycled into a future interaction that you never intended.

FAQs (Frequently Asked Questions)

What is facial recognition and emotion recycling in AI?

Ans: Facial recognition systems analyze faces and, in emotional AI, are used to infer emotions from expressions. Emotion recycling is when those captured emotional responses are reused to train AI to better predict people’s feelings and reactions.

What are the ethical concerns of AI emotion recycling?

Ans: Ethical concerns include consent, the potential for bias in recycled emotions, and loss of privacy if personal emotional data is not anonymized. If AI is allowed to extract and exploit emotions without regulation, it could easily become a form of emotional manipulation.

Who owns emotional data in AI?

Ans: This is a gray area. People produce the emotional responses, but the companies that collect and process the data often claim ownership of it, and the law has not settled the question.

How does emotional AI relate to human rights?

Ans: Emotional AI connects directly to issues of privacy, autonomy, and dignity. If it is used to surveil individuals, or to judge people’s actions and suitability based on their emotional responses, it could violate basic human rights.

What are the risks associated with manipulating recycled emotions?

Ans: Recycled emotional data could be used to influence buying habits, political orientations, social interactions, and more. Used at scale, it enables manipulation at a mass psychological level.

How is privacy protected in emotional artificial intelligence?

Ans: Protecting sensitive emotional data from exploitation or exposure requires strong regulation, transparency, and users’ consent.
